Azure Data Science VMs are good for development and testing. You could scale them with a virtual machine scale set, but that is a heavy and costly solution.

When thinking about scaling, a good option is to containerize the Anaconda / Python virtual environments and deploy them to Azure Kubernetes Service or, better yet, Azure Container Apps, the newer abstraction layer over Kubernetes that Azure provides.

Here is a quick way to create a container with Miniconda 3, Pandas and Jupyter Notebooks to interface with the environment. I also show how to deploy this single test container to Azure Container Apps.

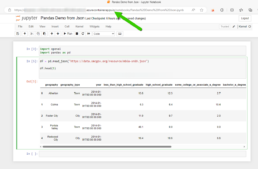

The result:

A Jupyter Notebook with Pandas Running on Azure Container Apps.

Container Build

If you know the libraries you need, it makes sense to start with the lightest base image, which is Miniconda3. You can also deploy the Anaconda3 container, but it ships with libraries you might never use, and those can introduce unnecessary vulnerabilities to remediate.

Miniconda 3: https://hub.docker.com/r/continuumio/miniconda3

Anaconda 3: https://hub.docker.com/r/continuumio/anaconda3

Below is a simple Dockerfile to build a container with the pandas, OpenAI and TensorFlow libraries.

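FROM continuumio/miniconda3

# Install Jupyter and create the notebook working directory
RUN conda install jupyter -y --quiet && \
    mkdir -p /opt/notebooks

WORKDIR /opt/notebooks

# Libraries used from the notebooks
RUN pip install pandas
RUN pip install openai
RUN pip install tensorflow

# Listen on all interfaces so the Container Apps ingress can reach port 8888
CMD ["jupyter", "notebook", "--ip=0.0.0.0", "--port=8888", "--no-browser", "--allow-root"]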
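
Once the container is running, it can be useful to confirm from a notebook cell that the libraries in the Dockerfile actually made it into the image. The snippet below is a minimal sketch, not part of the original build: the helper name check_packages is hypothetical, and the module names are assumed to match the three pip installs above.

```python
import importlib.util


def check_packages(names):
    """Map each top-level module name to True if it can be found for import."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Module names assumed from the pip installs in the Dockerfile.
    status = check_packages(["pandas", "openai", "tensorflow"])
    for name, ok in status.items():
        print(f"{name}: {'installed' if ok else 'MISSING'}")
```

The check only looks the modules up without importing them, so it stays fast even for a large package like TensorFlow.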

Build and Push the Container

Now that you have the Dockerfile, build the image, push it to your registry and deploy it on Azure Container Apps. I use Azure DevOps to get the job done.

Here’s the pipeline task:

- task: Docker@2
  inputs:
    containerRegistry: 'dockerRepo'
    repository: 'm05tr0/jupycondaoai'
    command: 'buildAndPush'
    Dockerfile: 'dockerfile'
    tags: |
      $(Build.BuildId)
      latest

Deploy to Azure Container Apps

Deploying to Azure Container Apps was painless once I understood the Azure DevOps task, since I can include my ingress configuration in the same step as the container. The only prerequisite was configuring DNS in my environment. The DevOps task is well documented; here are links to the official docs.

Architecture / DNS: https://learn.microsoft.com/en-us/azure/container-apps/networking?tabs=azure-cli

Azure Container Apps Deploy Task: https://github.com/microsoft/azure-pipelines-tasks/blob/master/Tasks/AzureContainerAppsV1/README.md

A few things I'd like to point out. You don't have to provide a username and password for the container registry; the task gets a token from az login. The resource group has to be the one where the Azure Container Apps environment lives; otherwise a new one will be created. The target port is the port the container listens on; as the container build shows, Jupyter Notebook listens on port 8888. If you are using a Container Apps environment with a private VNET, setting the ingress to external means that the app is reachable from the VNET, not from the internet. Lastly, I disable telemetry to stop reporting.

- task: AzureContainerApps@1
  inputs:
    azureSubscription: 'IngDevOps(XXXXXXXXXXXXXXXXXXXX)'
    acrName: 'idocr'
    dockerfilePath: 'dockerfile'
    imageToBuild: 'idocr.azurecr.io/m05tr0/jupycondaoai'
    imageToDeploy: 'idocr.azurecr.io/m05tr0/jupycondaoai'
    containerAppName: 'datasci'
    resourceGroup: 'IDO-DataScience-Containers'
    containerAppEnvironment: 'idoazconapps'
    targetPort: '8888'
    location: 'East US'
    ingress: 'external'
    disableTelemetry: true

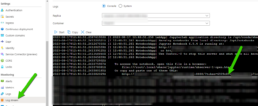

After deployment I had to get the Jupyter login token, which was easy with the Log Stream feature under Monitoring. For a deployment of multiple Jupyter Notebooks, it makes sense to use JupyterHub.
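
If you copy the Log Stream output to your clipboard or a file, pulling the token out by hand gets tedious. The following is a minimal sketch of one way to do it, assuming the default hexadecimal token that Jupyter generates and prints in its startup URLs. The helper name extract_token is hypothetical, and the sample log line and its token value are made up for illustration.

```python
import re

# Matches the token query parameter in the URLs Jupyter prints at startup.
# Assumes the default auto-generated token, which is a hexadecimal string.
TOKEN_PATTERN = re.compile(r"[?&]token=([0-9a-f]+)")


def extract_token(log_text):
    """Return the first Jupyter token found in log_text, or None if absent."""
    match = TOKEN_PATTERN.search(log_text)
    return match.group(1) if match else None


# Illustrative log line only; the token value is made up.
sample = "[I 12:00:00.000 NotebookApp] http://127.0.0.1:8888/?token=0123abcd4567ef89"
print(extract_token(sample))
```

The extracted value can be pasted into the Jupyter login page or appended to the app URL as a ?token= query parameter.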